Classification is arguably one of the most popular problems in Data Science and Machine Learning. We have long been fixated on teaching machines to classify and categorize things, whether images, symbols or any other form that data can take.

Artificial Neural Networks (ANNs) are widely used today in many applications, classification among them, and many libraries and frameworks are dedicated to building them with ease. Most of these frameworks and tools, however, require many more lines of code than the simple Scikit-Learn estimator we are going to learn about now.

In this article, we will discuss one of the easiest-to-implement Neural Networks for classification, Scikit-Learn’s MLPClassifier.

MLPClassifier vs Other Classification Algorithms

MLPClassifier stands for Multi-layer Perceptron classifier, a name that itself points to a Neural Network. Unlike other classification algorithms such as Support Vector Machines or the Naive Bayes Classifier, MLPClassifier relies on an underlying Neural Network to perform the task of classification.

One similarity with Scikit-Learn’s other classification algorithms, though, is that implementing MLPClassifier takes no more effort than implementing Support Vector Machines, Naive Bayes or any other Scikit-Learn classifier.
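To make the shared interface concrete, here is a minimal sketch that trains three Scikit-Learn classifiers through the same fit and predict calls. The synthetic data from make_classification and the chosen settings are assumptions for illustration only; they are not the hackathon dataset.

```python
from sklearn.datasets import make_classification
from sklearn.naive_bayes import GaussianNB
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC

# Synthetic stand-in data (an assumption, not the hackathon dataset).
X, y = make_classification(
    n_samples=200, n_features=4, n_informative=3, n_redundant=0, random_state=0
)

# Three different algorithms, one identical workflow.
models = {
    "SVC": SVC(),
    "GaussianNB": GaussianNB(),
    "MLPClassifier": MLPClassifier(max_iter=500, random_state=0),
}

predictions = {}
for name, model in models.items():
    model.fit(X, y)                       # same call for every estimator
    predictions[name] = model.predict(X)  # same call for every estimator
    print(name, "training accuracy:", model.score(X, y))
```

Only the constructor line differs between the three models, which is what makes swapping one classifier for another so cheap.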

Implementing MLPClassifier With Python

Once again we will rely on our favourite hackathon platform MachineHack for the dataset we are going to use in this coding walkthrough.

To get the dataset, head to MachineHack, sign up and select the Predict The Data Scientists Salary In India Hackathon. Once you start the hackathon, you can download the datasets from its assignments page.

To keep things simple, we will use only a small part of the dataset provided at MachineHack. Most of the steps are also covered in detail in one of our previous tutorials on implementing the Naive Bayes Classifier.

Let’s code!

Importing the Dataset

    import pandas as pd

    data = pd.read_csv("Final_Train_Dataset.csv")
    # .copy() avoids pandas' SettingWithCopyWarning when new columns are added later
    data = data[['company_name_encoded','experience', 'location', 'salary']].copy()

The above code block will read the dataset into a data-frame. We will stick with only a few selected features from the dataset: ‘company_name_encoded’, ‘experience’, ‘location’ and ‘salary’.

Cleaning The Data

Now let us clean up the data.

Splitting the ‘experience’ column into two numerical columns of minimum experience and maximum experience.

    #Cleaning the experience
    exp = list(data.experience)
    min_ex = []
    max_ex = []

    for i in range(len(exp)):
        exp[i] = exp[i].replace("yrs","").strip()
        min_ex.append(int(exp[i].split("-")[0].strip()))
        max_ex.append(int(exp[i].split("-")[1].strip()))

    #Attaching the new experiences to the original dataset
    data["minimum_exp"] = min_ex
    data["maximum_exp"] = max_ex
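The same split can also be written without an explicit loop. The sketch below uses pandas string methods on a few hypothetical sample rows; the "min-max yrs" format is inferred from the loop above and is an assumption about what the real column contains.

```python
import pandas as pd

# Hypothetical sample rows in the "min-max yrs" format the loop above assumes.
sample = pd.DataFrame({"experience": ["5-7 yrs", "0-1 yrs", "10-15 yrs"]})

# Capture the two numbers around the hyphen; extract returns columns 0 and 1.
bounds = sample["experience"].str.extract(r"(\d+)\s*-\s*(\d+)").astype(int)

sample["minimum_exp"] = bounds[0]
sample["maximum_exp"] = bounds[1]
print(sample)
```

If a row does not match the pattern, extract yields missing values and the integer conversion fails, so malformed rows would need handling first.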

Encoding the textual data in ‘location’ and ‘salary’ columns

    #Label encoding location and salary
    from sklearn.preprocessing import LabelEncoder

    le = LabelEncoder()
    data['location'] = le.fit_transform(data['location'])
    data['salary'] = le.fit_transform(data['salary'])
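Because the code above reuses a single encoder, it only remembers the salary classes after the second fit. If you want to turn predicted class numbers back into readable labels later, one option is to keep a dedicated encoder per column. This is a minimal sketch; the salary bands shown are hypothetical values used only for illustration.

```python
from sklearn.preprocessing import LabelEncoder

# Hypothetical salary bands, used only for illustration.
salary = ["6to10", "0to3", "10to15", "0to3"]

# A dedicated encoder keeps the label mapping available for later decoding.
salary_encoder = LabelEncoder()
encoded = salary_encoder.fit_transform(salary)

# classes_ holds the sorted unique labels; the index of a label is its code.
print(list(salary_encoder.classes_))
print(list(encoded))

# inverse_transform maps integer codes back to the original labels.
decoded = salary_encoder.inverse_transform(encoded)
print(list(decoded))
```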

We will now delete the original experience column and reorder the data-frame.

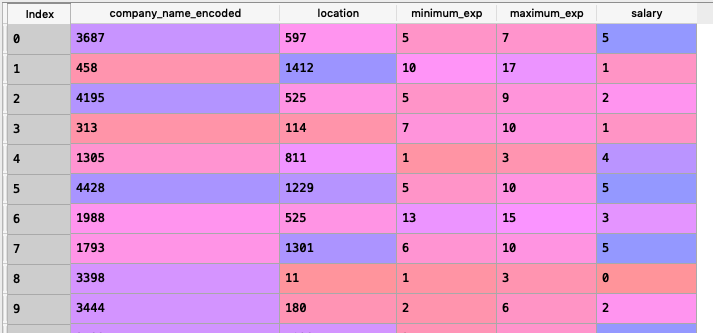

#Deleting the original experience column and reordering data.drop(['experience'], inplace = True, axis = 1)data = data[['company_name_encoded', 'location','minimum_exp', 'maximum_exp', 'salary']]

After executing all the above code blocks our new dataset will look like this:

Feature Scaling

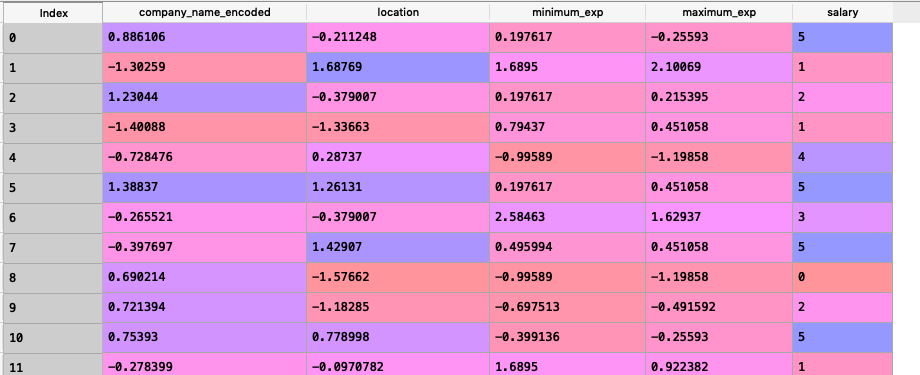

Scaling all the numerical features in the dataset.

Note:

‘Salary’ column is not feature scaled as it consists of the labelled classes and not numerical values.

from sklearn.preprocessing import StandardScalersc = StandardScaler()data[['company_name_encoded', 'location', 'minimum_exp', 'maximum_exp']] = sc.fit_transform(data[['company_name_encoded', 'location', 'minimum_exp', 'maximum_exp']])

After scaling the dataset will look like what is shown below :
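The walkthrough scales the full dataset before it is split. A common variation is to fit the scaler on the training rows only and reuse its statistics for the validation rows, so that no information from the validation set influences preprocessing. The sketch below illustrates that variation on randomly generated numbers, which are an assumption and not the hackathon data.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Random stand-in features (an assumption, not the hackathon dataset).
rng = np.random.default_rng(0)
X = rng.normal(loc=50.0, scale=10.0, size=(100, 4))

X_train, X_val = train_test_split(X, test_size=0.2, random_state=21)

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn mean and std from training rows
X_val_scaled = scaler.transform(X_val)          # reuse the training statistics

# Training columns end up with mean ~0 and standard deviation ~1.
print(X_train_scaled.mean(axis=0).round(6))
print(X_train_scaled.std(axis=0).round(6))
```

The validation columns will be close to, but not exactly, zero mean and unit variance, which is expected.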

Creating training and validation sets

    #Splitting the dataset into training and validation sets
    from sklearn.model_selection import train_test_split

    training_set, validation_set = train_test_split(data, test_size = 0.2, random_state = 21)

    #classifying the predictors and target variables as X and Y
    X_train = training_set.iloc[:,0:-1].values
    Y_train = training_set.iloc[:,-1].values
    X_val = validation_set.iloc[:,0:-1].values
    y_val = validation_set.iloc[:,-1].values

The above code block separates the predictors and targets, which we can use to train and validate our model.
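To see what the iloc slicing does, here is a small sketch on a toy data-frame. The column names and values are made up for illustration; the only thing carried over from the walkthrough is that the target sits in the last column.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Toy frame: four feature columns followed by the target (hypothetical values).
toy = pd.DataFrame(
    np.arange(50).reshape(10, 5),
    columns=["f1", "f2", "f3", "f4", "target"],
)

toy_train, toy_val = train_test_split(toy, test_size=0.2, random_state=21)

# Every column except the last becomes a predictor; the last is the target.
X_toy_train = toy_train.iloc[:, 0:-1].values
y_toy_train = toy_train.iloc[:, -1].values
X_toy_val = toy_val.iloc[:, 0:-1].values
y_toy_val = toy_val.iloc[:, -1].values

print(X_toy_train.shape, y_toy_train.shape)
print(X_toy_val.shape, y_toy_val.shape)
```

With ten rows and test_size set to 0.2, eight rows go to training and two to validation.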

Measuring the Accuracy

We will use the confusion matrix to determine the accuracy, which is measured as the number of correct predictions divided by the total number of predictions. Correct predictions lie on the diagonal of the confusion matrix, so the accuracy is the sum of the diagonal divided by the sum of all entries.

    def accuracy(confusion_matrix):
        diagonal_sum = confusion_matrix.trace()
        sum_of_all_elements = confusion_matrix.sum()
        return diagonal_sum / sum_of_all_elements
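As a quick sanity check, the sketch below applies the same trace-over-sum calculation to a handful of made-up labels and compares the result with Scikit-Learn's accuracy_score. The label arrays are hypothetical.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix


def accuracy(cm):
    # Correct predictions sit on the diagonal of the confusion matrix.
    return cm.trace() / cm.sum()


# Made-up labels: 6 of the 8 predictions match the true class.
y_true = np.array([0, 1, 2, 2, 1, 0, 2, 1])
y_hat = np.array([0, 1, 1, 2, 1, 0, 2, 0])

cm_demo = confusion_matrix(y_true, y_hat)
trace_accuracy = accuracy(cm_demo)

print(cm_demo)
print(trace_accuracy, accuracy_score(y_true, y_hat))
```

Both numbers agree, so for plain accuracy you could also call accuracy_score directly and skip the confusion matrix.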

Building the MLPClassifier

Finally, we will build the Multi-layer Perceptron classifier.

    #Importing MLPClassifier
    from sklearn.neural_network import MLPClassifier

    #Initializing the MLPClassifier
    classifier = MLPClassifier(hidden_layer_sizes=(150,100,50), max_iter=300, activation='relu', solver='adam', random_state=1)

- hidden_layer_sizes: This parameter sets the number of hidden layers and the number of nodes in each of them. The ith element of the tuple is the number of nodes in the ith hidden layer, so the length of the tuple is the total number of hidden layers in the network. Here, (150,100,50) means three hidden layers with 150, 100 and 50 nodes.

- max_iter: The maximum number of iterations. For the stochastic solvers (‘sgd’ and ‘adam’) this is the number of epochs; training may stop earlier if the solver converges.

- activation: The activation function for the hidden layers.

- solver: This parameter specifies the algorithm used for weight optimization.

- random_state: This parameter sets a seed so that the same results can be reproduced.

After initializing, we can fit the training data to the Neural Network.

    #Fitting the training data to the network
    classifier.fit(X_train, Y_train)
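Once a network is fitted, its attributes show how the constructor parameters translated into an actual architecture. The sketch below uses the same constructor settings as above on synthetic three-class data, which is an assumption standing in for the hackathon dataset.

```python
from sklearn.datasets import make_classification
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in: 4 features, 3 classes (not the hackathon dataset).
X, y = make_classification(
    n_samples=300, n_features=4, n_informative=4, n_redundant=0,
    n_classes=3, n_clusters_per_class=1, random_state=0,
)

clf = MLPClassifier(hidden_layer_sizes=(150, 100, 50), max_iter=300,
                    activation="relu", solver="adam", random_state=1)
clf.fit(X, y)

# n_layers_ counts the input and output layers as well as the hidden ones.
print("Total layers:", clf.n_layers_)
# One weight matrix per connection between consecutive layers.
print("Weight shapes:", [w.shape for w in clf.coefs_])
# Number of epochs the solver actually ran (at most max_iter).
print("Epochs run:", clf.n_iter_)
```

The weight shapes make the role of hidden_layer_sizes visible: each tuple entry appears as the output width of one matrix and the input width of the next.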

Using the trained network to predict

    #Predicting y for X_val
    y_pred = classifier.predict(X_val)
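Besides hard class predictions, a fitted MLPClassifier can also report a probability for each class through predict_proba. The following sketch, again on synthetic three-class data that is only an assumption for illustration, shows how the two outputs relate.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in data (not the hackathon dataset).
X, y = make_classification(
    n_samples=200, n_features=4, n_informative=4, n_redundant=0,
    n_classes=3, n_clusters_per_class=1, random_state=0,
)

clf = MLPClassifier(hidden_layer_sizes=(50,), max_iter=300, random_state=1)
clf.fit(X, y)

labels = clf.predict(X)       # one class label per row
proba = clf.predict_proba(X)  # one probability per class per row

# The columns of proba follow the order of clf.classes_.
most_likely = clf.classes_[proba.argmax(axis=1)]

print(proba[:3].round(3))
print(labels[:3], most_likely[:3])
```

For a multi-class problem like this one, the predicted label is the class with the highest probability, which can be useful when you want to inspect how confident the network is.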

Calculating the accuracy of predictions

    #Importing Confusion Matrix
    from sklearn.metrics import confusion_matrix

    #Comparing the predictions against the actual observations in y_val
    #(confusion_matrix expects the true labels first, then the predictions)
    cm = confusion_matrix(y_val, y_pred)

    #Printing the accuracy
    print("Accuracy of MLPClassifier : ", accuracy(cm))

Closing Note

On executing the above code blocks you will get a fairly low accuracy, in the range of 40% to 45%. For a first attempt it’s a decent start; however, the model can be tweaked and tuned to improve the accuracy.

All fellow Data Science enthusiasts are encouraged to try this problem, post their scores in the comments and submit their solutions at MachineHack. Keep up to date with MachineHack, take part in our exciting new hackathons and win exciting prizes.
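One possible way to approach the tuning is a cross-validated grid search over a few hyperparameters. The sketch below is only an illustration: the synthetic data and the candidate values in param_grid are assumptions, and no particular gain on the hackathon dataset is implied.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in data (not the hackathon dataset).
X, y = make_classification(
    n_samples=200, n_features=4, n_informative=4, n_redundant=0,
    n_classes=3, n_clusters_per_class=1, random_state=0,
)

# Candidate values are arbitrary starting points, not recommendations.
param_grid = {
    "hidden_layer_sizes": [(50,), (100, 50)],
    "alpha": [1e-4, 1e-2],  # L2 regularization strength
}

search = GridSearchCV(
    MLPClassifier(max_iter=300, random_state=1),
    param_grid,
    cv=3,
    scoring="accuracy",
)
search.fit(X, y)

print("Best parameters:", search.best_params_)
print("Best cross-validated accuracy:", search.best_score_)
```

Every combination in the grid is trained once per fold, so the search time grows quickly as more values are added.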
