Recently, researchers from Google Research and the University of Tokyo introduced deployment efficiency, a new measure of reinforcement learning (RL) performance, together with a model-based algorithm called Behavior-Regularized Model-ENsemble (BREMEN). According to the researchers, the algorithm can optimise an effective policy offline using far less data than previous methods.

Reinforcement learning is one of the most popular machine learning techniques today, with applications in domains including robotics, operations research, medicine and autonomous driving. It has recently achieved impressive success in learning behaviours for a range of sequential decision-making tasks.

Behind the Model

According to the researchers, most reinforcement learning algorithms assume online access to the environment, which lets them interleave updates to the policy with experience collected by that policy. In many real-world settings, however, the cost or potential risk of deploying a new data-collection policy is high, which can make it prohibitive to update the data-collection policy more than a few times during learning.

The researchers reasoned that if a task can be learned with only a small number of data-collection policies, the associated costs and risks can be substantially reduced. This motivated a new measure of RL algorithm performance, called deployment efficiency, which counts how many times the data-collection policy is changed while improving from a random policy to one that solves the task.
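The definition can be made concrete with a small counting function. This is a minimal sketch, not code from the paper: it assumes a hypothetical log listing, in order, which policy collected each batch of data, and, following the wording of the definition, it counts switches between policies rather than counting the initial random policy itself. The names `count_policy_changes`, `online_log` and `constrained_log` are illustrative.

```python
from typing import Sequence


def count_policy_changes(collection_log: Sequence[str]) -> int:
    """Count how many times the data-collection policy changed.

    `collection_log` is a hypothetical record of which policy collected each
    batch, in order. The initial (random) policy is not counted as a change.
    """
    return sum(
        1
        for previous, current in zip(collection_log, collection_log[1:])
        if current != previous
    )


# Online RL: the collection policy is updated after every batch.
online_log = ["random", "pi_1", "pi_2", "pi_3", "pi_4", "pi_5"]
# Deployment-constrained RL: the same six batches, but only one policy switch.
constrained_log = ["random"] * 3 + ["pi_1"] * 3

print(count_policy_changes(online_log))       # 5
print(count_policy_changes(constrained_log))  # 1
```

Both logs collect the same number of batches, so they use the same amount of data, but the second one needs far fewer deployments.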

To be both sample-efficient and deployment-efficient, an algorithm must learn effectively from the much smaller datasets collected between successive deployments. With such small datasets, however, existing offline methods tend to suffer from extrapolation errors: they make inaccurate predictions for states and actions that the data does not cover, which leads to poor performance.
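A toy illustration of why small datasets invite extrapolation errors (not taken from the paper): a simple model fitted to 20 transitions from a narrow region of a hypothetical one-dimensional environment is accurate inside that region but badly wrong outside it. The dynamics function, the linear model and the chosen states are all assumptions made for illustration.

```python
import math
import random
from typing import List, Tuple


def true_dynamics(s: float) -> float:
    # Hypothetical nonlinear environment: next state as a function of the state.
    return s + 0.5 * math.sin(2 * s)


def fit_linear(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = w * x + c."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, mean_y - w * mean_x


rng = random.Random(0)
# A small dataset that only covers states in [0, 0.5].
states = [rng.uniform(0.0, 0.5) for _ in range(20)]
w, c = fit_linear(states, [true_dynamics(s) for s in states])

# Compare the fitted model with the true dynamics inside and outside the data.
errors = {s: abs((w * s + c) - true_dynamics(s)) for s in (0.25, 3.0)}
for s, err in errors.items():
    print(f"state {s}: absolute prediction error {err:.3f}")
```

A policy optimised against such a model can exploit the large out-of-distribution errors, which is why offline methods need some form of regularisation.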

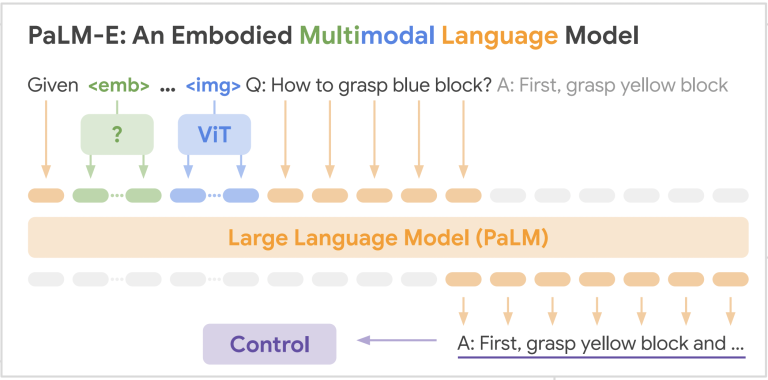

To address these problems in limited-deployment settings, the researchers proposed Behavior-Regularized Model-ENsemble (BREMEN). BREMEN learns an ensemble of dynamics models from the collected data and trains a policy on imaginary rollouts generated by that ensemble, while implicitly regularising the learned policy through appropriate parameter initialisation and conservative trust-region updates.
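BREMEN's policy is trained on imaginary rollouts from a learned ensemble of dynamics models. The following is a minimal, hypothetical sketch of generating one such rollout: the toy linear "models" stand in for learned neural networks, and picking a random ensemble member at each step is an assumption about how the ensemble is used, not a detail stated in the article. All names and coefficients are illustrative.

```python
import random
from typing import Callable, List, Tuple

Model = Callable[[float, float], float]  # (state, action) -> next state
Policy = Callable[[float], float]        # state -> action
Transition = Tuple[float, float, float]  # (state, action, next state)


def make_ensemble(coeffs: List[Tuple[float, float]]) -> List[Model]:
    # Toy linear models s' = a * s + b * u; a real ensemble would be learned from data.
    return [lambda s, u, a=a, b=b: a * s + b * u for a, b in coeffs]


def imaginary_rollout(ensemble: List[Model], policy: Policy, start_state: float,
                      horizon: int, rng: random.Random) -> List[Transition]:
    """Roll the policy out inside the models, sampling an ensemble member each step."""
    trajectory = []
    state = start_state
    for _ in range(horizon):
        action = policy(state)
        model = rng.choice(ensemble)  # members disagree where the data is sparse
        next_state = model(state, action)
        trajectory.append((state, action, next_state))
        state = next_state
    return trajectory


def linear_policy(state: float) -> float:
    return -0.5 * state  # hypothetical policy that pushes the state towards zero


ensemble = make_ensemble([(0.9, 0.5), (1.0, 0.4), (0.95, 0.6)])
rollout = imaginary_rollout(ensemble, linear_policy, start_state=1.0,
                            horizon=5, rng=random.Random(0))
for s, u, s_next in rollout:
    print(f"s={s:.3f}  u={u:.3f}  s'={s_next:.3f}")
```

The imagined transitions in `rollout` would then feed a policy update, so that step needs no new real-world data and no new deployment.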

BREMEN follows the Dyna style of model-based RL, in which the policy learns from transitions imagined by the model ensemble rather than directly from real ones. Its behaviour regularisation is implicit rather than an explicit penalty: conservative trust-region updates keep the learned policy from straying far from the behaviour present in the collected data.
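The conservative trust-region idea can be illustrated with the TRPO-style constraint that a policy update must keep the KL divergence between the old and new policies below a threshold. The sketch below is a simplified, hypothetical version, not BREMEN's implementation: it uses a one-dimensional Gaussian policy with a fixed standard deviation, only moves the mean, and enforces the constraint with a backtracking line search. `max_kl` and the other parameter values are assumptions.

```python
import math


def gaussian_kl(mu_old: float, std_old: float, mu_new: float, std_new: float) -> float:
    """KL(old || new) between two univariate Gaussian action distributions."""
    return (math.log(std_new / std_old)
            + (std_old ** 2 + (mu_old - mu_new) ** 2) / (2 * std_new ** 2)
            - 0.5)


def trust_region_step(mu: float, std: float, proposed_mu: float,
                      max_kl: float = 0.01, backtrack: float = 0.5,
                      max_tries: int = 20) -> float:
    """Shrink a proposed update of the policy mean until KL(old || new) <= max_kl."""
    step = proposed_mu - mu
    for _ in range(max_tries):
        candidate = mu + step
        if gaussian_kl(mu, std, candidate, std) <= max_kl:
            return candidate
        step *= backtrack
    return mu  # no acceptable step found: keep the old policy


# A greedy optimiser proposes moving the mean from 0.0 to 1.0 (KL = 0.5);
# the trust region only accepts a much smaller move.
print(trust_region_step(mu=0.0, std=1.0, proposed_mu=1.0))  # 0.125
```

Because each update is accepted only if it stays inside the trust region, a policy that starts near the data-collection behaviour and takes a limited number of such steps remains close to it, which is the sense in which trust-region updates act as an implicit regulariser.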

For the baselines, the researchers used open-source implementations of Soft Actor-Critic (SAC), behaviour cloning (BC), Batch-Constrained deep Q-learning (BCQ) and Behavior Regularized Actor Critic (BRAC). They used Adam, an algorithm for first-order gradient-based optimisation of stochastic objective functions, as the optimiser.
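For readers unfamiliar with Adam, its update rule can be sketched for a single scalar parameter. This is a generic illustration of the algorithm, not the researchers' training code; the quadratic objective, the learning rate of 0.1 and the step count are assumptions, while β1 = 0.9, β2 = 0.999 and ε = 1e-8 are the commonly used defaults.

```python
import math
from typing import Callable


def adam_minimize(grad: Callable[[float], float], x0: float, lr: float = 0.1,
                  beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
                  steps: int = 500) -> float:
    """Minimise a 1-D function with Adam, given a function returning its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients (first moment)
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients (second moment)
        m_hat = m / (1 - beta1 ** t)         # bias corrections for the zero initialisation
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Minimise f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_star = adam_minimize(lambda x: 2 * (x - 3.0), x0=0.0)
print(x_star)
```

Dividing by the square root of the second-moment estimate gives each parameter its own adaptive step size, which is one reason Adam is a common default for training neural-network policies and models.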

Evaluating BREMEN

The researchers evaluated BREMEN on high-dimensional continuous-control benchmarks and found that it achieves strong deployment efficiency: it learns successful policies with only 5-10 deployments and significantly outperforms existing off-policy and offline RL algorithms in the deployment-constrained setting.

They also evaluated BREMEN on standard offline RL benchmarks, where only a single static dataset is used. There, BREMEN not only achieves performance competitive with the state of the art at standard dataset sizes but also learns from datasets 10-20 times smaller, where previous methods fail.

Wrapping Up

In this work, the researchers introduced deployment efficiency, a novel measure of reinforcement learning performance that counts the number of changes in the data-collection policy during learning. To improve deployment efficiency, they proposed Behavior-Regularized Model-ENsemble (BREMEN), a model-based offline algorithm with implicit KL regularisation via appropriate policy initialisation and trust-region updates.
