Last year, we compiled a list of top chips for accelerating ML tasks. We talked about the rising demand for AI-focused systems on chips, and 2020 was no different: the trend continued. While a few chipmakers capitalised on this trend, chip giants like Intel went through a tough period. Intel even agreed to sell its NAND business to South Korean chipmaker SK Hynix.

Apple, too, announced its move away from Intel processors and opened a new chapter with Apple Silicon. Smartphones, data centres and home pods are a few of the many applications of custom-made AI chips. Companies are increasingly moving towards dedicated cores for handling AI tasks on their chips.

The need for more ASICs is expected to rise as artificial intelligence gains more ground in global technology. Companies like Amazon and Google are already on their way to strengthening their server silicon efforts, while NVIDIA, the current market leader, is giving Intel and AMD a tough time.

In this article, we take a look at chips/accelerators that made their presence felt in the year 2020.

A14 Bionic

Apple recently decided to use its own chips in its devices. This decision was backed by its high-performance Bionic chip series, which is proving to be a powerhouse for ML tasks. At its latest event, ‘Time Flies’, Apple introduced the A14 Bionic, a 5nm chipset that debuted in the new iPad Air, making it the world’s first device to run on a 5nm chip. According to Apple, the A14 Bionic houses 11.8 billion transistors thanks to the breakthrough 5-nanometer process technology. This enables the devices to edit 4K videos, create art, play immersive games, and more.

The A14 also features a new 6-core CPU design and a new 4-core graphics architecture, along with a new 16-core Neural Engine. According to Apple, the Neural Engine is twice as fast as its predecessor and can perform up to 11 trillion operations per second.

Just like in the A13, the combination of the new Neural Engine, CPU machine learning accelerators and a high-performance GPU enables the A14 to deliver powerful on-device experiences for image recognition, natural language learning, analysing motion, and more.

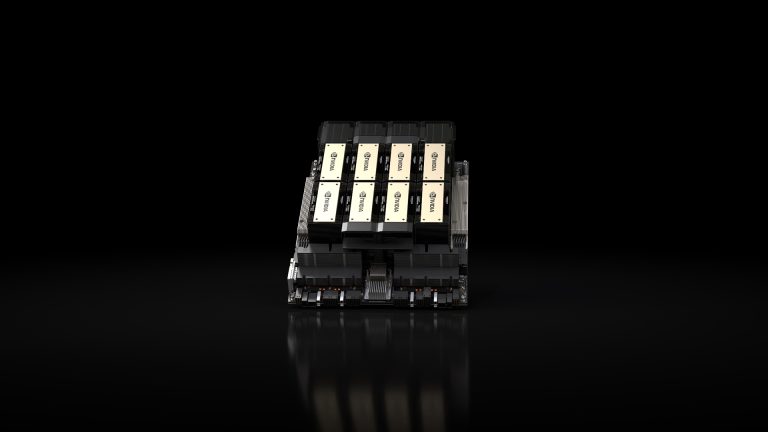

NVIDIA A100

With rapid innovation and frequent new releases, NVIDIA is currently leading the global AI chip market. The latest addition to its line-up of processors is the NVIDIA A100, which incorporates building blocks across hardware, networking, software, libraries, and optimised AI models and applications from NGC, NVIDIA’s hub for GPU-optimised software for ML tasks. NVIDIA claims that the A100 GPU offers 6x higher performance for training and 7x higher performance for inference than its previous-generation Volta architecture.

Intel Habana Gaudi

At the ongoing AWS flagship event re:Invent, AWS CEO Andy Jassy announced new EC2 instances that will use up to eight Habana Gaudi accelerators and, according to AWS, deliver up to 40% better price-performance than current GPU-based EC2 instances for machine learning workloads. Gaudi is developed by Habana Labs, which was acquired by Intel last year. Gaudi accelerators are custom-made for training models for natural language processing, object detection, recommendation and other ML applications.

AWS Inferentia

Since we launched EC2 Inf1 instances powered by #AWS Inferentia, customers like @Snapchat, @CondeNast, & @AnthemBCBS have used Inf1 for #ML inference at lower cost. Now #Alexa runs the majority of its inference workloads on Inf1, as well. https://t.co/YiyEXD776T

— Andy Jassy (@ajassy) November 14, 2020

Amazon made its silicon ambitions obvious as early as 2015. Since then, it has launched the custom-built AWS Inferentia chip as part of its push into specialised hardware. Inferentia’s performance convinced AWS to deploy it for the popular Alexa services, which require state-of-the-art ML for speech processing and other tasks. Inferentia contains four NeuronCores equipped with a large on-chip cache, which cuts down on external memory accesses and reduces latency when accelerating typical deep learning workloads such as convolutions and transformers.

AWS Trainium

Following AWS Inferentia’s success in providing customers with what AWS describes as high-performance ML inference at the lowest cost in the cloud, AWS is launching Trainium to cover the training side, which Inferentia does not address. With both Trainium and Inferentia, AWS stated, customers will have an end-to-end flow of ML compute, from scaling training workloads to deploying accelerated inference.

According to AWS, Trainium offers the highest performance with the most teraflops (TFLOPS) of compute power for ML in the cloud and enables a broader set of ML applications. The Trainium chip is specifically optimised for deep learning training workloads for applications including image classification, semantic search, translation, voice recognition, natural language processing and recommendation engines.

AWS Trainium shares the same AWS Neuron SDK as AWS Inferentia, making it easy for developers using Inferentia to get started with Trainium. Neuron SDK is integrated with popular ML frameworks, and developers can easily migrate to AWS Trainium from GPU-based instances with minimal code changes.

Cloud AI 100

Qualcomm recently announced its Cloud AI 100 accelerator, which uses advanced signal processing and cutting-edge power efficiency to support AI solutions for multiple environments, including the data centre, cloud edge, edge appliance, and 5G infrastructure.

Mozart AI

SimpleMachines, whose team includes leading research scientists and industry heavyweights formerly of Qualcomm, Intel and Sun Microsystems, has created what it calls a first-of-its-kind, easily programmable, high-performance chip that will accelerate a wide variety of AI and machine learning applications. The chip, Mozart, is a TSMC 16-nanometer design that utilises HBM2 memory and is sampling as a standard PCIe card. According to the company, initial results have shown significant speedups across a broad set of AI applications, unlike other specialised AI chips. Examples include recommendation engines, speech and language processing, and image detection, all of which can run simultaneously.

IBM POWER10

In August, IBM unveiled the next-gen IBM POWER10 chip. This is IBM’s first commercialised 7nm processor, and IBM claims it is up to three times more efficient than previous models in the POWER CPU series.

It is expected to be available in the second half of 2021 and will be manufactured by Samsung. IBM Research has been partnering with Samsung Electronics on research and development for more than a decade, including demonstration of the semiconductor industry’s first 7nm test chips through IBM’s Research Alliance.

CV28M

Known for its computer vision chips for security cameras, Ambarella recently launched the CV28M camera system on chip (SoC) as part of its CVflow family. It combines advanced image processing, high-resolution video encoding and computer vision processing in a single, low-power design. The CV28M is designed to run computer vision applications at the edge. This chip, claims Ambarella, can be used for autonomous vehicles, smart cameras and drones.

KL720

Kneron, backed by companies such as Qualcomm, Sequoia Capital and Alibaba, launched its neural processing unit, the KL720, to meet the increasing demand for on-device machine learning. Built around an ARM Cortex-M4 design, the KL720 delivers 0.9 TOPS per watt, which Kneron says is the highest performance-to-power ratio on the market. Kneron claims that the NPU can offer seamless processing of 4K resolution images, full high-definition videos, and 3D sensing for fool-proof facial recognition and more.

Sapeon X220

The Sapeon X220, unveiled recently by South Korea’s SK Telecom, is designed to speed up servers that cater to a growing number of mobile devices that perform better with AI.

According to SK Telecom, Sapeon is the first Korean ASIC for data centres to be commercialised. It hints at Korea’s ambitions to expand beyond its traditional mobile and broadband businesses and keep up in an era of AI-driven innovation. This news comes after SK Hynix announced that it would buy Intel’s NAND business for $9 billion.

(Note: The above list is in no particular order. If you think we have missed out on a few, let us know in the comments. We have also compiled a list of top chips to look forward to in 2021. Link below.)