“If you had asked AI experts what an LLM was before [the launch of ChatGPT in] 2022, many probably would have answered that it’s a law degree,” quipped Oliver Molander in his post on LinkedIn, adding that many find it extremely difficult to accept that AI is much more than just LLMs and text-to-video models.

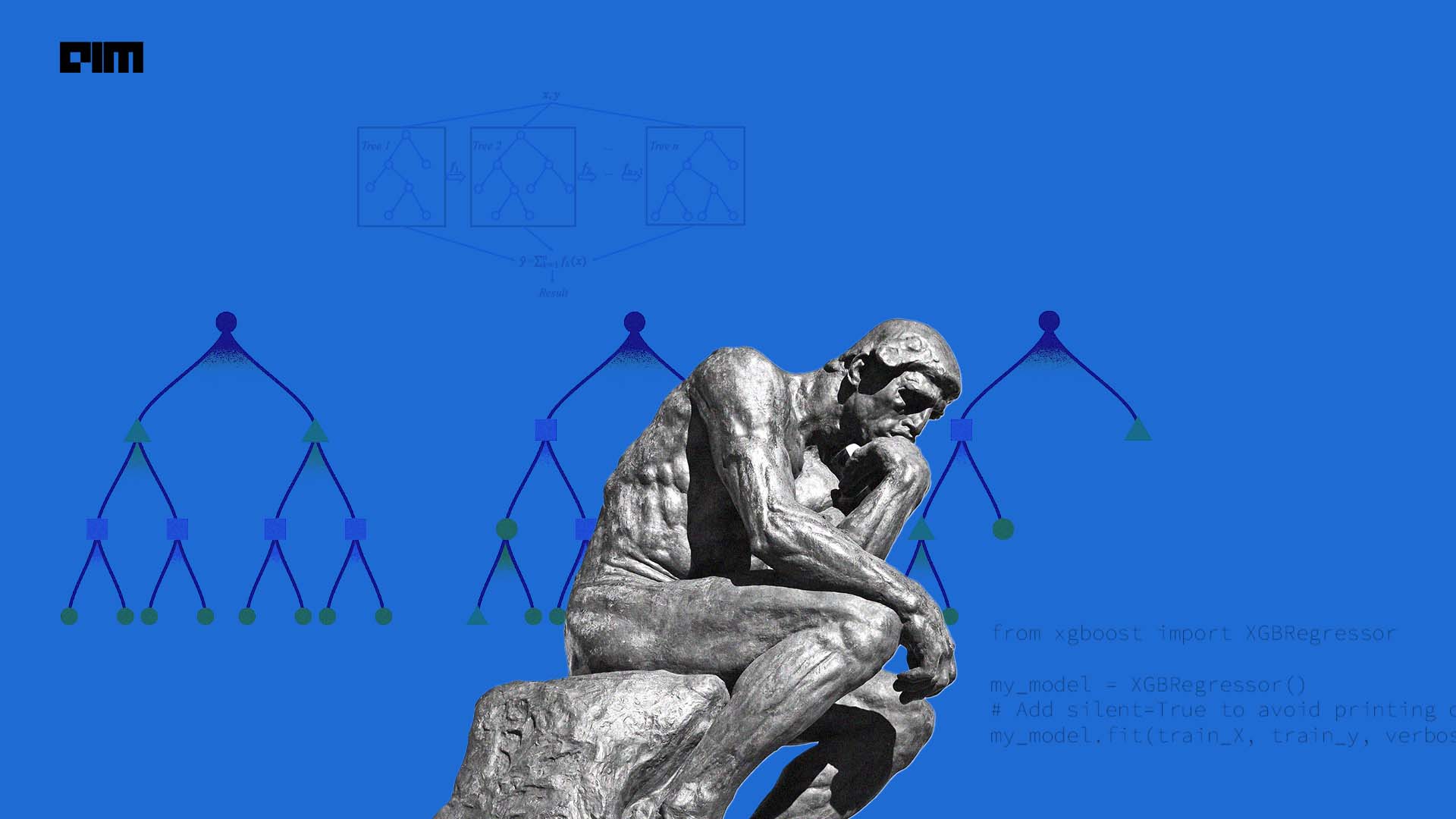

The real winner, when it comes to tabular data and making sense of spreadsheets, is XGBoost (aka Extreme Gradient Boosting). It continues to excel amid all the hype around deep learning techniques, LLMs, and, more recently, Retrieval-Augmented Generation (RAG). XGBoost 2.0, launched in October last year, made it perform even better on several new classification tasks.

Though techniques like XGBoost, deep learning, and RAG are not directly comparable, they serve a broadly similar purpose: to retrieve information, make sense of it, and generate outputs.

Many such cases.

— Bojan Tunguz (@tunguz) March 10, 2024

Reject FOMO, embrace tradition. https://t.co/1b3rW5kRVK

Heard of the New XGBoost LLM?

Despite the advancements in generative AI and the proliferation of LLMs, the practical utility of XGBoost remains unparalleled, particularly in domains reliant on tabular datasets. XGBoost’s interpretability, efficiency, and robustness make it indispensable for applications ranging from finance to healthcare.

The hype around LLMs and RAG techniques has made people forget about the importance of other ML techniques, such as XGBoost. VCs are so hell-bent on hopping onto the GenAI and LLM bandwagon that new terms are often mislabelled as new types of LLM.

But in reality, a huge chunk of the return on investment is concentrated around predictive ML techniques such as XGBoost and Random Forest. The majority of business use cases for AI/ML rely on proprietary tabular business data.

When dealing with tabular datasets, efficiency is paramount. XGBoost’s versatility extends beyond classification to regression and ranking tasks. Whether you need to predict a continuous target variable, rank items by relevance, or classify data into multiple categories, XGBoost can handle it with ease.
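For readers who have not met gradient boosting before, the idea behind XGBoost's name can be sketched in a few lines of plain Python. This is a toy illustration for a continuous target, not XGBoost's implementation: it repeatedly fits one-split "stumps" to the errors left by the previous rounds. The function names (`fit_stump`, `fit_boosted`), the data, and the hyperparameters are all made up for the example, and XGBoost itself adds regularisation, second-order gradient information, and many engineering optimisations on top.

```python
# Toy gradient boosting for regression with squared-error loss.
# Illustrative only; this is NOT how XGBoost is implemented internally.

def fit_stump(xs, residuals):
    """Find the single threshold on a 1-D feature that best fits the residuals.

    Assumes xs contains at least two distinct values.
    """
    best = None
    # Skip the largest value: splitting there would leave the right side empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return threshold, left_mean, right_mean


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted(xs, ys, n_rounds=50, learning_rate=0.3):
    """Start from the mean, then add stumps fitted to the remaining residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is simply the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, stumps, learning_rate


def predict_boosted(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


# Made-up data: one feature, one continuous target.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [1.2, 1.9, 3.1, 3.9, 5.2, 6.1, 6.8, 8.1]

model = fit_boosted(xs, ys)
mse = sum((predict_boosted(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
mean_y = sum(ys) / len(ys)
baseline_mse = sum((mean_y - y) ** 2 for y in ys) / len(ys)
```

Each round nudges the predictions towards the target, so the training error after boosting (`mse`) ends up below that of simply predicting the mean (`baseline_mse`).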

XGBoost’s interpretability, efficiency, and versatility make it the preferred choice for many predictive modelling endeavours, particularly those reliant on tabular data. Conversely, the evolving capabilities of LLMs and the augmentative potential of RAG offer tantalising prospects for knowledge-intensive applications.

RAG is Too Good, But Not so Much

A study conducted in July 2022, analysing 45 mid-sized datasets, revealed that tree-based models such as XGBoost and Random Forests continued to exhibit superior performance compared to deep neural networks when applied to tabular datasets.

RAG burst onto the scene in 2020 when the brainiacs at Meta AI decided to jazz up the world of LLMs. It’s a game-changer. Designed to give LLMs much-needed access to external information, RAG swooped in to fix the problem that haunted its predecessors: the dreaded hallucinations.

With RAG, customers can add another dataset, and give the LLM fresh information to generate the answer from. Some call this “fancier prompt engineering”. This is what enterprises need to generate insights from their own data. Even then, this technique has not completely fixed the hallucination issue within LLMs, and has arguably made it worse, since people have started trusting these models even more.
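The "fancier prompt engineering" description can be made concrete with a minimal sketch: pick the most relevant documents from an extra dataset and paste them into the prompt ahead of the question. Everything here is an assumption for illustration: the function names, the sample documents, and the prompt wording are hypothetical, and simple word overlap stands in for the embedding-based similarity search that real RAG systems typically use. The call to an actual LLM is deliberately left out.

```python
import re


def tokenize(text):
    """Lower-case the text and return its set of distinct words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=2):
    """Rank documents by how many distinct words they share with the question.

    Word overlap is a stand-in for a real similarity search.
    """
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=2):
    """Put the retrieved documents in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical enterprise documents the base model has never seen.
documents = [
    "Refunds are processed within 14 days of the return request.",
    "The office is closed on public holidays.",
    "Return requests must include the original order number.",
]

prompt = build_prompt("How many days do refunds take?", documents, k=1)
```

The resulting `prompt` would then be sent to the LLM. Nothing in this step forces the model to stick to the supplied context, which is one reason hallucinations can persist.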

However, the deployment of RAG is not without its challenges, particularly those concerning data privacy and security. Instances of prompt injection vulnerabilities underscore the need for robust safeguards in leveraging RAG-enabled models.

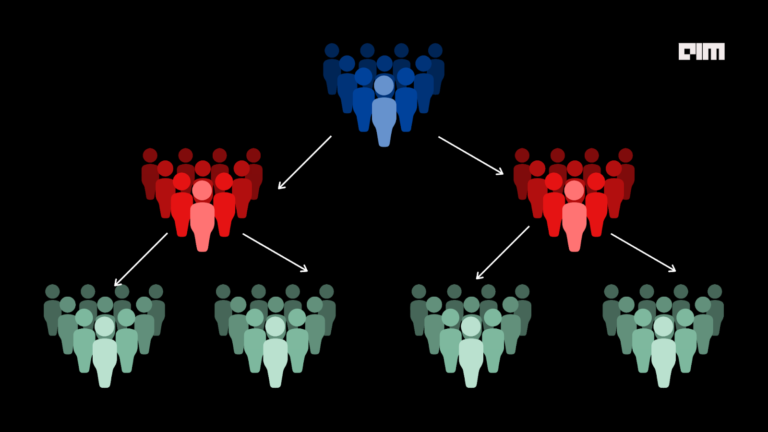

Traditionally, there have been two distinct groups in the ML ecosystem: the tabular-data-focused data scientists who use XGBoost, LightGBM, and similar tools, and the LLM group. Both these groups have used separate techniques and models. “I have always been a big fan of XGBoost! There was a time, I was more of an XGBoost modeller than an ML modeller,” said Damien Benveniste from The AiEdge on LinkedIn.

Everyone is focussed on developing LLMs.

— Marc (@marccodess) August 29, 2023

The LLMs produce textual output, but the focus here is on using the internal embeddings (latent structure embeddings) generated by LLMs, which can be passed to traditional tabular models like XGBoost. While Transformers have undoubtedly revolutionised generative AI, their strengths lie in unstructured data, sequential data, and tasks that involve complex patterns.
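As a rough sketch of what passing embeddings to a tabular model can look like, a text field's embedding is simply appended to the numeric columns, giving one wider feature row per record. The `toy_embedding` function below is a hypothetical stand-in that derives a fixed-length vector from a hash; it carries no meaning and only mimics the shape of a real LLM embedding. The record fields are likewise invented for the example.

```python
import hashlib


def toy_embedding(text, dim=4):
    """Hypothetical stand-in for an LLM embedding (NOT a real one).

    Returns a deterministic vector of `dim` values in [0, 1] derived from a
    hash, so the example runs without any model. Supports dim up to 32.
    """
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [digest[i] / 255.0 for i in range(dim)]


def build_feature_row(numeric_features, text, dim=4):
    """Concatenate ordinary tabular columns with the text's embedding."""
    return list(numeric_features) + toy_embedding(text, dim)


# Made-up records mixing numeric columns with a free-text note.
records = [
    {"amount": 120.0, "age_days": 30, "note": "customer disputed the invoice"},
    {"amount": 45.5, "age_days": 7, "note": "paid on time, no issues"},
]

feature_matrix = [
    build_feature_row([r["amount"], r["age_days"]], r["note"])
    for r in records
]
```

Each row of `feature_matrix` now holds two numeric columns followed by four embedding columns, which is the kind of purely numeric table a model such as XGBoost expects as input.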

Krishna Rastogi, CTO of MachineHack, said, “Transformers are like the H-bombs of machine learning, and XGBoost is the reliable sniper rifle. When it comes to tabular data, XGBoost proves to be the sharpshooter of choice.”